The EU AI Act is reshaping the landscape of AI development and deployment. This guide offers a practical approach to designing AI systems, balancing innovation with regulatory compliance.

As AI becomes increasingly prevalent in business and society, understanding and implementing EU AI Act compliance is crucial for organizations of all sizes.

Disclaimer: This article provides a high-level overview of the EU AI Act and its implications for AI system design. It is not legal advice. Readers are encouraged to consult with legal professionals and refer to official EU AI Act resources for the most up-to-date and accurate information.

Last updated: 1 October 2024. Please note that AI regulation is an evolving field. Check for the latest updates and information on this topic.

Core Principles and System Boundaries

Core Principles of the EU AI Act

The EU AI Act establishes a regulatory framework that classifies AI systems according to their risk level. This tiered approach means that higher-risk systems, such as those used in healthcare or public services, must meet more stringent requirements than lower-risk applications. The Act's key requirements include:

- Human Oversight: Requires human intervention capabilities in high-risk AI systems.

- Transparency: Users should be informed when interacting with AI, and the systems’ decisions must be explainable.

- Risk Management: AI models should undergo regular evaluations to minimize risks, including bias, security vulnerabilities, and instances of unequal treatment.

- Data Governance: Strict data handling protocols apply to training, validation, and testing data, alongside GDPR compliance for any personal data.

- Robustness and Accuracy: Systems must be resilient and meet predefined accuracy standards to ensure consistent performance.
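The tiered approach can be pictured as a lookup from risk tier to obligations. This is a minimal sketch and not a legal classification tool. The four tier names follow the Act's commonly cited structure (unacceptable, high, limited, minimal risk). The use-case labels and the one-line obligation summaries are hypothetical simplifications.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers of the EU AI Act, ordered from least to most restrictive."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Simplified, illustrative summaries only; the Act's actual obligations are far more detailed.
OBLIGATIONS = {
    RiskTier.MINIMAL: ["voluntary codes of conduct"],
    RiskTier.LIMITED: ["transparency: inform users they are interacting with AI"],
    RiskTier.HIGH: [
        "risk management system",
        "data governance",
        "technical documentation",
        "human oversight",
        "accuracy, robustness and cybersecurity",
    ],
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the market"],
}

# Hypothetical use-case labels mapped to tiers, for illustration only.
USE_CASE_TIERS = {
    "spam_filter": RiskTier.MINIMAL,
    "customer_chatbot": RiskTier.LIMITED,
    "public_benefit_eligibility": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
}


def obligations_for(use_case: str) -> list[str]:
    """Return simplified obligations for a known use case; unknown cases need legal review."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        raise KeyError(f"unclassified use case {use_case!r}: requires legal assessment")
    return OBLIGATIONS[tier]


print(obligations_for("customer_chatbot"))
```

Raising an error for unknown use cases is deliberate. It stops an unclassified system from silently defaulting to the lightest tier.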

Defining AI Systems and Boundaries

A crucial step in achieving compliance is defining the boundaries of an AI system. Article 3(1) of the EU AI Act defines an AI system as:

“A machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

This definition aligns with the OECD’s 2024 framework but takes a broader perspective, considering the entire system context rather than the AI model alone. This emphasis on the design of the AI system as a whole reflects the Act’s purpose: regulating and governing the deployment of AI systems with their potential risks in mind.

This article specifically addresses compliance with the EU AI Act and relevant European regulations; it does not cover AI frameworks in other regions. However, readers outside the EU who follow the OECD’s 2024 framework are encouraged to align their AI regulatory objectives with the OECD definition.

Why Do Boundaries Matter?

Here are some key reasons:

- Regulatory Responsibility: The organization or individual placing the AI system on the market, or putting it into service, is the AI provider responsible for all parts of the system.

- Scope Precedes Classification: An AI system’s risk classification can only be determined accurately once its actual boundaries, and hence its scope, are fully understood.

- End-to-End Accountability: Some regulatory standards apply across the entire system, not just individual components. This includes everything from the user interface to the data processing pipelines.

- Quality Management: Points (a) to (f) of Article 17(1) of the EU AI Act apply directly to the elements constituting the AI system. The Quality Management System (QMS) covers a wide range of factors. These range from technical aspects, such as the performance of the AI system, to organizational context, staff skills, internal audits, and management oversight. Together, these elements help ensure that the AI system operates effectively and remains compliant with regulations.

Mapping AI System Architecture to Regulatory Requirements

Understanding Annex IV (2)

Annex IV (2) of the EU AI Act specifies what the technical documentation must describe about the elements of an AI system and its development process. This includes design specifications, system architecture, data requirements, human oversight measures, validation and testing procedures, and cybersecurity measures. To comply with the EU AI Act, the AI system architecture should address the requirements defined in this Annex:

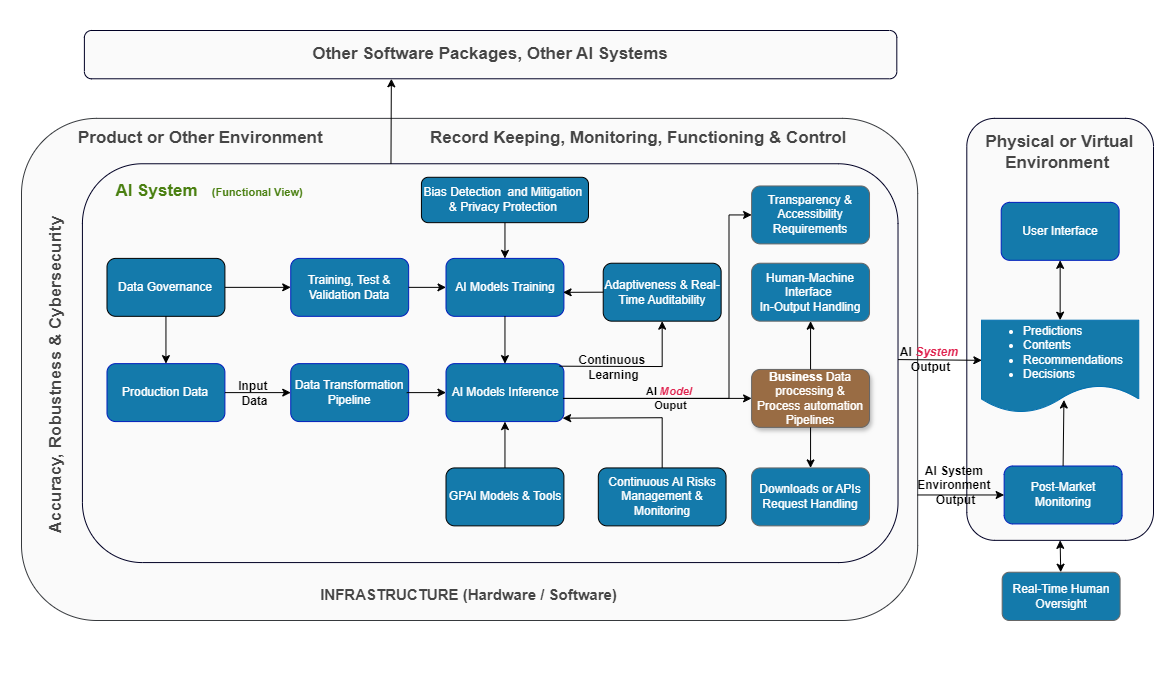

From Table 1, a Reference Architecture can be derived. Once instantiated with specific design and implementation choices, it forms the system architecture of a concrete AI system.

A reference architecture is a standardized template or blueprint that outlines the structure and components of a system, serving as a guide for designing and implementing solutions.

The Reference Architecture of an AI system in line with the regulation

The reference architecture should clearly define which components are within the boundary of the AI system and which are not. For example, infrastructure components such as hardware or external software are not part of the AI system itself. Even so, they still fall under the regulation’s documentation requirements: the technical documentation must describe how the system interacts with them and the hardware it runs on (Annex IV(1)(b) and (1)(e)).

Figure 1 presents a functional view that does not make any assumptions about potential design and implementation choices. Solutions may be technical and/or organizational, and are context-dependent.
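One way to make the boundary explicit is to keep a machine-readable inventory of components. Each entry records whether the component sits inside the AI system, or is external but must still be described in the technical documentation. The `Component` record, the component names, and the section labels below are hypothetical. This is a sketch, not a format the Act prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    kind: str          # e.g. "model", "pipeline", "ui", "hardware", "external_software"
    in_boundary: bool  # True if the component is part of the AI system itself


# Hypothetical inventory, for illustration only.
INVENTORY = [
    Component("clustering_model", "model", True),
    Component("feature_pipeline", "pipeline", True),
    Component("post_processing", "pipeline", True),
    Component("oversight_dashboard", "ui", True),
    Component("gpu_cluster", "hardware", False),
    Component("case_management_system", "external_software", False),
]


def documentation_sections(inventory: list[Component]) -> dict[str, list[str]]:
    """Group components into sections of a (hypothetical) technical-documentation outline.

    Components outside the boundary are not dropped: external software is listed
    as an interaction, and hardware as the platform the system runs on.
    """
    sections: dict[str, list[str]] = {
        "system_elements": [],
        "external_software_interactions": [],
        "target_hardware": [],
    }
    for c in inventory:
        if c.in_boundary:
            sections["system_elements"].append(c.name)
        elif c.kind == "hardware":
            sections["target_hardware"].append(c.name)
        else:
            sections["external_software_interactions"].append(c.name)
    return sections


for section, names in documentation_sections(INVENTORY).items():
    print(f"{section}: {', '.join(names)}")
```

Keeping out-of-boundary components in the same inventory makes it harder to forget them when the documentation is assembled.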

At its core, an AI system is composed of various components that interact to generate predictions, content, recommendations, and decisions. Designing an AI system that complies with the EU AI Act requires a clear understanding of these components and how they fit within the regulatory framework. Below is an outline of the key elements of AI systems, as depicted in Figure 1, and how they align with the Act’s requirements:

1. AI Model Training and Inference

- Core components that generate predictions and recommendations, integrated with data processing systems and user interfaces.

- Regulatory Focus: Transparency in design and operation, especially for high-risk applications.

- Continuous learning capabilities with real-time auditability.

2. Data Governance and Transformation Pipelines

- End-to-end data lineage tracking.

- Transformation of raw data into suitable formats for AI models.

- Regulatory Focus: Strict data governance, ensuring GDPR compliance and bias mitigation.

- Robust privacy protection mechanisms.

3. Human Oversight and Transparency

- Human-AI collaboration framework with intuitive interfaces.

- Real-time human oversight capabilities.

- Regulatory Focus: Explainable AI decisions, allowing human intervention and correction.

- Transparency and accessibility features for clear outcome interpretation.

4. Continuous Risk Management and Monitoring

- Proactive risk assessment and mitigation strategies.

- Ongoing performance monitoring and adaptive models.

- Regulatory Focus: Continuous evaluation of AI system impact, especially in high-risk sectors.

5. Business Process Integration

- Automated pipelines for AI-driven decision support.

- API-based integration with existing software ecosystems.

- Regulatory Focus: Ensuring AI system outputs align with sector-specific regulations.
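For the real-time auditability and end-to-end lineage tracking listed above, one common technique is an append-only, hash-chained event log. Each entry stores the hash of the previous entry, so altering any earlier record breaks the chain. The `AuditLog` class and the event fields below are hypothetical. A production system would also need durable storage, access control, and retention rules.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only log where each entry is chained to the previous one by SHA-256."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: str, **details) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"ts": time.time(), "event": event, "details": details, "prev": prev_hash}
        # Hash a canonical JSON serialization so verification is reproducible.
        payload = json.dumps(body, sort_keys=True).encode()
        entry = {**body, "hash": hashlib.sha256(payload).hexdigest()}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check the links; False if anything was altered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: entry[k] for k in ("ts", "event", "details", "prev")}
            payload = json.dumps(body, sort_keys=True).encode()
            if entry["prev"] != prev_hash or entry["hash"] != hashlib.sha256(payload).hexdigest():
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical events: data lineage followed by an inference record.
log = AuditLog()
log.append("data_ingested", dataset="regions_v3", rows=1200)
log.append("inference", model_version="1.4.0", input_id="req-17", output="cluster_2")
print(log.verify())                     # True
log.entries[0]["details"]["rows"] = 999  # simulate tampering
print(log.verify())                     # False
```

Chaining detects tampering but does not prevent it. The log would still need write-protected storage.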

Technical Highlights

- Modular architecture supporting various AI models, including general-purpose AI (GPAI) models.

- Real-time transparency and explainability features.

- Bias detection and mitigation throughout the AI lifecycle.
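Bias detection often starts with simple group-level metrics. The sketch below computes the demographic parity difference: the gap between the highest and lowest rates of positive outcomes across groups. The choice of metric, the group labels, and the sample data are assumptions. A real assessment would combine several metrics with domain review.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions in each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Max minus min selection rate across groups; 0.0 means equal rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary decisions for two groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, grps))               # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, grps))  # 0.5
```

Running such a check at training time, before release, and during monitoring is one way to cover the "throughout the AI lifecycle" part.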

The Overlooked Role of Post-Processing in AI Systems

A crucial but often neglected aspect of AI systems is the post-processing of AI model outputs. Post-processing involves additional steps after the AI model generates its results. These steps adjust or refine the output before it is shown to users, helping ensure it meets legal and ethical standards.

In many cases, post-processing includes:

- Bias mitigation: Adjusting AI-generated outputs to reduce bias and ensure fairness.

- Privacy protection: Ensuring that the AI’s output complies with privacy laws by removing sensitive or inappropriate data.

- Accessibility improvements: Making AI outputs more understandable for users through simplified explanations or actionable insights in accordance with Directives (EU) 2016/2102 and (EU) 2019/882.
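A post-processing step of this kind might look like the sketch below. It applies per-group decision thresholds, which is one post-processing approach to bias mitigation, and it redacts e-mail addresses from free-text explanations before they reach users. The thresholds, the group names, and the single e-mail pattern are hypothetical placeholders. Real PII redaction needs much broader coverage, and group-specific thresholds would themselves need legal justification.

```python
import re

# Hypothetical per-group thresholds, assumed to come from a fairness review.
THRESHOLDS = {"group_a": 0.60, "group_b": 0.55}
DEFAULT_THRESHOLD = 0.60

# Deliberately simple e-mail pattern; not a complete PII detector.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def post_process(score: float, group: str, explanation: str) -> dict:
    """Turn a raw model score into a user-facing result."""
    threshold = THRESHOLDS.get(group, DEFAULT_THRESHOLD)
    return {
        "decision": "eligible" if score >= threshold else "not_eligible",
        "threshold_used": threshold,  # kept for auditability
        "explanation": EMAIL_RE.sub("[REDACTED EMAIL]", explanation),
    }


print(post_process(0.57, "group_b", "Similar to case filed by jane.doe@example.org"))
```

Returning the threshold that was applied keeps the adjustment visible to reviewers, rather than hiding it inside the decision.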

Why Does Post-Processing Matter?

While the AI model generates predictions, content, recommendations, or decisions, the post-processing stage is where legal and ethical requirements truly come into play. For example, in a public welfare system, AI-generated decisions on benefit allocations may need to be adjusted to comply with local laws or reviewed by human administrators. Poor handling at this stage can introduce additional risks, such as bias or unfair treatment, that could put the system in breach of the EU AI Act.
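The welfare example suggests routing some outputs to human administrators before they take effect. The sketch below assumes a hypothetical rule set: adverse decisions and low-confidence outputs go to a review queue, and all other outputs proceed automatically. The `Decision` and `ReviewRouter` names and the 0.8 cutoff are illustrative, not regulatory figures.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    case_id: str
    outcome: str       # e.g. "grant" or "deny"
    confidence: float  # model confidence in [0, 1]


@dataclass
class ReviewRouter:
    min_confidence: float = 0.8  # illustrative cutoff, not a regulatory value
    review_queue: list[Decision] = field(default_factory=list)

    def route(self, decision: Decision) -> str:
        """Return 'human_review' or 'auto', queuing decisions that need a person."""
        needs_review = decision.outcome == "deny" or decision.confidence < self.min_confidence
        if needs_review:
            self.review_queue.append(decision)
            return "human_review"
        return "auto"


router = ReviewRouter()
print(router.route(Decision("c1", "grant", 0.93)))  # auto
print(router.route(Decision("c2", "deny", 0.97)))   # human_review
print(router.route(Decision("c3", "grant", 0.62)))  # human_review
print([d.case_id for d in router.review_queue])     # ['c2', 'c3']
```

Sending every denial to a person, regardless of model confidence, reflects the higher stakes of adverse decisions on public benefits.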

Evolving Perspectives on Compliance and Implementation

The proposed Reference Architecture is a personal attempt to design AI systems that maintain regulatory compliance. However, it is worth noting that under Article 96(1)(f), the Commission has the authority to provide additional guidelines on how to practically apply the definition of an AI system set out in Article 3(1).

How far those additional guidelines may affect the proposed Reference Architecture remains to be seen.

Maintaining Compliance Beyond Architecture

It is fair to recognize that compliance is more than just system architecture. For instance:

- Compliance Beyond Architecture: Ensuring alignment with the EU AI Act requires attention to operations, governance, and ethics throughout the AI system’s lifecycle.

- Cross-functional Collaboration: Compliance demands teamwork across technical, legal, and ethical domains, as transparency and bias mitigation extend beyond purely technical fixes.

- Ongoing Risk Management: Regular risk assessments and open communication with stakeholders are crucial to maintaining trust and accountability in AI systems.

- Agility in Action: Agile frameworks empower organizations to stay compliant with evolving regulations while fostering continuous innovation.

- Sustained Governance: Post-deployment monitoring, human oversight, and strong governance ensure that compliance remains an ongoing priority, not a one-time task.
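Post-deployment monitoring is often automated with a drift statistic that compares live input data with the data the system was validated on. The sketch below uses the population stability index (PSI) over pre-binned proportions. The bins, the small epsilon, and the 0.2 alert level are assumptions; 0.2 is a common rule of thumb, not a regulatory threshold.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as proportions that each sum to 1."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical bin shares of one input feature.
baseline = [0.25, 0.25, 0.25, 0.25]  # at validation time
live = [0.10, 0.20, 0.30, 0.40]      # observed in production
psi = population_stability_index(baseline, live)
print(f"PSI = {psi:.3f}", "-> investigate drift" if psi > 0.2 else "-> stable")
```

PSI is only one of several drift statistics. Which features to monitor, and what counts as actionable drift, are decisions for the risk management process.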

Case Study: A Low-Risk AI Model in a High-Risk AI System

Consider a system that processes personally identifiable information (PII) and uses AI-driven clustering to allocate state social benefits, similar to a welfare system. This example looks closely at the “Business Data Processing and Automation” part of the system described in Figure 1.


In the scenario depicted in Figure 2, an unsupervised AI model uses non-sensitive data, such as population density, city infrastructure, commercial activity, and real estate prices, to create socio-economic profiles of different regions. Although simplified, this example reflects a real-world approach.
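The unsupervised step in this scenario can be illustrated with k-means clustering over aggregated regional features. The sketch below is a plain-Python k-means (Lloyd's algorithm) run on hypothetical, already-normalized region data with a fixed seed. The scenario does not specify the actual algorithm, beyond its being unsupervised, so k-means here is an assumption.

```python
import random


def kmeans(points: list[list[float]], k: int, iterations: int = 20, seed: int = 0) -> list[int]:
    """Return a cluster label for each point using Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = [p[:] for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid by squared Euclidean distance.
        for i, p in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: sum((x - y) ** 2 for x, y in zip(p, centroids[c])),
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Hypothetical normalized features per region (aggregated, no personal data):
# [population_density, infrastructure, commercial_activity, real_estate_price]
regions = {
    "north": [0.9, 0.8, 0.9, 0.95],
    "centre": [0.85, 0.9, 0.8, 0.9],
    "east": [0.2, 0.3, 0.25, 0.2],
    "south": [0.15, 0.2, 0.3, 0.1],
}
labels = kmeans(list(regions.values()), k=2)
print(dict(zip(regions, labels)))
```

The cluster IDs themselves are arbitrary. What matters is that similar regions share a label.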

Since the model only processes aggregated, non-sensitive data, it would likely be classified as low-risk under the EU AI Act, with minimal transparency requirements, as its output isn’t directly user-facing.

However, a downstream module uses these AI-driven clusters to recommend state benefits based on an individual’s address, introducing personal data into the system and influencing decisions about public benefits.

Even though the core AI model handles non-personal data, the system as a whole, which processes personal data and affects critical decisions, would be classified as high-risk under Annex III, point 5(a), of the EU AI Act. The system would need to explain how benefit recommendations were made, including the weighting of socio-economic factors.
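A transparent scoring step downstream of the clusters can meet this explanation need, because it makes the weighting of socio-economic factors directly reportable. The sketch below assumes a hypothetical linear scoring rule with made-up factor names and weights. It shows one way to produce per-factor contributions, not how such a system must be built.

```python
# Hypothetical weights for a region's socio-economic factors (illustrative only).
WEIGHTS = {
    "population_density": -0.2,
    "commercial_activity": -0.3,
    "real_estate_price_index": -0.4,
    "infrastructure_gap": 0.5,
}


def explain_score(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the total score and each factor's contribution, largest impact first."""
    contributions = [(name, WEIGHTS[name] * features[name]) for name in WEIGHTS]
    total = sum(value for _, value in contributions)
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return total, contributions


region = {
    "population_density": 0.5,
    "commercial_activity": 0.2,
    "real_estate_price_index": 0.3,
    "infrastructure_gap": 0.8,
}
total, parts = explain_score(region)
print(f"benefit priority score: {total:.2f}")
for name, value in parts:
    print(f"  {name:<25} {value:+.2f}")
```

With a linear rule, the contributions add up exactly to the score. This keeps the explanation shown to administrators consistent with the actual recommendation.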

This example highlights a key point: under the EU AI Act, the risk classification of an AI system depends on its overall impact and use, not just the core model. Even a seemingly low-risk model can elevate a system to high-risk status if it influences important decisions. This underscores the need for a holistic evaluation of AI systems, particularly regarding downstream applications.
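The point that classification follows the whole system, not the core model, can be written as a rule. The system's tier is at least the highest tier of any component, and its intended use can raise it further. The component names, their tiers, and the use-case flag below are hypothetical. The rule is also a simplification of the Act's actual classification logic in Article 6 and Annex III.

```python
from dataclasses import dataclass

# Simplified tier scale for this sketch.
MINIMAL, LIMITED, HIGH = 1, 2, 3
TIER_NAMES = {MINIMAL: "minimal", LIMITED: "limited", HIGH: "high"}


@dataclass(frozen=True)
class Part:
    name: str
    tier: int  # tier assessed for the component in isolation


def system_tier(parts: list[Part], annex_iii_use_case: bool) -> int:
    """System-level tier: never lower than its riskiest part; an Annex III use case makes it high."""
    tier = max(p.tier for p in parts)
    if annex_iii_use_case:
        tier = max(tier, HIGH)
    return tier


# Hypothetical components of the benefits system in the case study.
parts = [
    Part("regional_clustering_model", MINIMAL),
    Part("address_to_region_lookup", MINIMAL),
    Part("benefit_recommender", MINIMAL),
]
# Evaluating only the core model understates the risk:
print(TIER_NAMES[system_tier(parts[:1], annex_iii_use_case=False)])  # minimal
# The full system recommends public benefits (Annex III, point 5(a)):
print(TIER_NAMES[system_tier(parts, annex_iii_use_case=True)])       # high
```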

In cases of non-compliance or overlooked risks, organizations may face fines or operational restrictions under the EU AI Act, especially in high-risk sectors such as healthcare, state benefits or finance.

Challenges and Considerations

Implementing the EU AI Act presents several challenges:

- Complexity: AI governance is a nuanced and complex field. This article provides a high-level overview, but in-depth understanding may require additional resources.
  - How: Invest in comprehensive training programs for staff and potentially hire AI ethics and compliance specialists.

- Evolving Landscape: AI regulation is rapidly evolving. Stay informed about the latest updates and be prepared to adapt your systems.
  - How: Establish a dedicated team or role for monitoring regulatory changes and update internal policies accordingly to stay current.

- Varied Impact: The Act may affect organizations differently based on their size and resources. Smaller entities and start-ups may need to seek additional support or resources for compliance.
  - How: Smaller entities can seek industry partnerships, leverage open-source compliance tools, or consult specialized AI compliance firms to manage resource constraints.

- Ethical Considerations: Beyond legal compliance, organizations should reflect on the broader ethical impact of their AI systems. Issues like fairness, bias, and privacy are crucial to public trust.
  - How: Implementing ethics review boards and integrating ethical impact assessments into the AI development lifecycle can help address broader ethical implications.

- Balancing Innovation and Compliance: While ensuring regulatory compliance, it’s crucial not to stifle innovation.
  - How: Seek proportionate responses to regulation. Organizations can adopt agile compliance frameworks that allow for rapid prototyping and testing within regulatory boundaries, fostering innovation while maintaining compliance.

Practical Examples of Regulatory Compliance in AI

Several real-world AI products show that regulatory requirements can be addressed in practice. Below are three examples from different sectors:

- Google Health – AI Diagnostics: Google developed AI tools for medical imaging, particularly in radiology and ophthalmology, while addressing healthcare regulations such as patient-safety and data protection laws.

- FICO – AI-Based Credit Scoring: FICO integrates AI into its credit scoring systems and addresses compliance through published guidelines on transparency and bias mitigation.

- Amazon – Personalized Recommendation Engine: Amazon’s recommendation system is designed to comply with the GDPR by maintaining transparency and protecting user data in its personalization algorithms.

These examples illustrate that while regulatory compliance in AI can be complex, it is attainable through thoughtful design, transparency, and continuous monitoring.

Building Trustworthy AI Systems

As AI regulations evolve, several key insights emerge for building trustworthy, compliant AI systems:

- System Boundaries Matter: Defining AI system boundaries is essential for regulatory compliance.

- Post-Processing is Critical: Overlooked elements like post-processing can greatly influence an AI system’s compliance and risk profile.

- Flexibility is Key: AI architectures must adapt to evolving regulatory guidelines.

- Collaboration is Crucial: Successful implementation requires a mix of technical expertise and regulatory understanding.

Conclusion: Harnessing AI with Trust and Responsibility

Looking ahead, the EU AI Act offers an opportunity to build trust in AI technologies. By embracing the Act’s principles, AI providers and organizations can unlock AI’s full potential while safeguarding societal values.

To fully capitalize on these opportunities, organizations should consider the following key actions:

- Regularly review and adjust the boundaries of AI systems.

- Invest in flexible, compliant AI architectures.

- Engage in continuous education and cross-disciplinary collaboration.

- Contribute to the evolving discourse on AI governance.

- Balance regulatory compliance with innovation.

- Consider the global impact and diverse perspectives on AI governance.

Integrating ethical and responsible AI practices into system design can encourage innovation while safeguarding individual rights, leading to a balanced future for AI solutions.

Feedback and diverse perspectives on any part of this article are more than welcome.
